In conclusion: The growing AI content pollution problem

Why do the large language models of artificial intelligence love gobbledygook so much?

Artificial intelligence tools like ChatGPT make content creation super easy. However, garbage web content has quickly become a huge and growing problem. Let’s call it what it is: AI pollution.

New technologies often move from utility to saturation to pollution. The use of plastic bottles, for example, made it easy to purchase drinks but led to environmental disasters, including the 1.6m sq km Great Pacific garbage patch.

Electric light has delivered many benefits to humans, but light pollution has profoundly affected the ways that birds, animals, plants, and insects react to their rapidly changing environment. In the Boston suburbs where I live, light pollution means I need to travel more than two hours to see the Milky Way galaxy, a sight that humans could see from anywhere just a few generations ago.

Now the same thing is happening with the output of large language model (LLM) chatbot tools like ChatGPT. These models are spewing garbage content that is polluting the web.

AI Pollution

In a New York Times guest essay, Erik Hoel cites a study titled Monitoring AI-Modified Content at Scale: A Case Study on the Impact of ChatGPT on AI Conference Peer Reviews. In it, the authors present a “case study of scientific peer review in AI conferences that took place after the release of ChatGPT.” The results “suggest that between 6.5% and 16.9% of text submitted as peer reviews to these conferences could have been substantially modified by LLMs, i.e. beyond spell-checking or minor writing updates.”

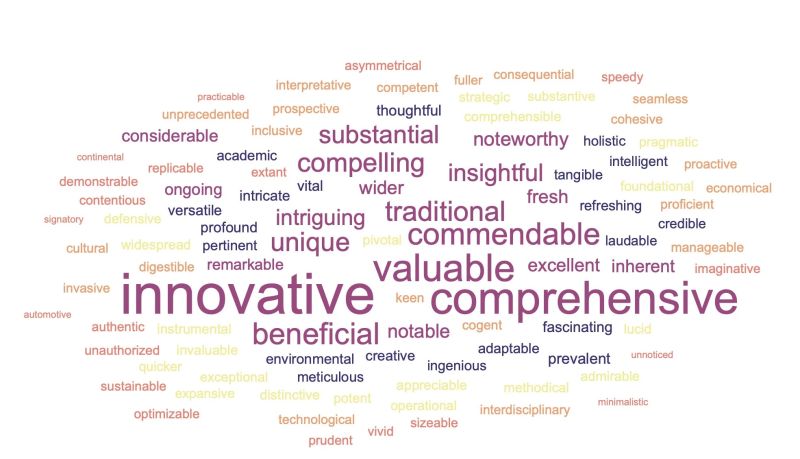

A clue to such AI-created content is the use of what I call gobbledygook: the flexible, scalable, innovative, best-of-breed, cutting-edge nonsense words that people insert into their writing in an attempt to look smart.

It turns out that LLMs love gobbledygook! The “content at scale” authors cited words such as “innovative”, “commendable”, “meticulous”, and “intricate” as appearing much more often in the reviews they studied since the release of ChatGPT.
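For anyone who wants to try this kind of word spotting on their own text, here is a minimal Python sketch. It is not the study's method, just a rough illustration: the function name `marker_rate` is made up for this example, and the word list contains only the four adjectives quoted above. It counts exact matches, so a variant like “meticulously” is ignored.

```python
import re
from collections import Counter

# Only the four adjectives quoted above; a longer list would be an assumption.
MARKER_WORDS = {"innovative", "commendable", "meticulous", "intricate"}


def marker_rate(text: str) -> float:
    """Return marker-word occurrences per 1,000 words (0.0 for empty text)."""
    # Lowercase the text and split it into runs of letters.
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    # Count only exact matches against the marker list.
    counts = Counter(w for w in words if w in MARKER_WORDS)
    return 1000 * sum(counts.values()) / len(words)
```

A high rate is a hint, not proof. Plenty of humans write this way too, which is rather the point.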

I’ve been informally studying the phenomenon of AI pollution myself. I have a Google alert set up for the word newsjacking - the art and science of injecting your ideas into a breaking news story to generate tons of media coverage, get sales leads, and grow business - a marketing and PR technique I pioneered more than a decade ago.

I’m delighted that newsjacking quickly became a technique used by thousands of marketers, and that the word is included in the Oxford English Dictionary. And I enjoy seeing what others have to say about newsjacking.

In recent months, I’ve noticed an increase in the number of articles about newsjacking that appear on agency and consultant websites. Some are original content, but most are ChatGPT-created dreck.

In conclusion

I’ve found an important clue to figuring out if a blog post or web page is ChatGPT-created. Scroll to the bottom and see if “In conclusion” or “Conclusion” is used at the end of the article.

You don’t need to tell your reader that your article is ending! We get it. However, the LLMs must have been trained on academic content that includes a conclusion, because ChatGPT seems to always end articles with “Conclusion” or “In conclusion”.
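The scroll-to-the-bottom check can be sketched in a few lines of Python. This is a rough heuristic under stated assumptions, not a reliable detector: the function name `ends_with_conclusion` and the choice to look at the last three paragraphs are hypothetical, and the input is assumed to be plain text with blank lines between paragraphs.

```python
import re

# The two phrases named above; matching is case-insensitive.
CLOSING_MARKERS = ("in conclusion", "conclusion")


def ends_with_conclusion(text: str, tail_paragraphs: int = 3) -> bool:
    """Return True if one of the last few paragraphs opens with a closing marker."""
    # Split on blank lines and drop empty paragraphs.
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    for paragraph in paragraphs[-tail_paragraphs:]:
        # Ignore leading heading or emphasis marks such as "#" or "*".
        start = paragraph.lstrip("#* ").lower()
        if start.startswith(CLOSING_MARKERS):
            return True
    return False
```

Because it only checks how a paragraph begins, it will also flag human writers who sign off the same way, so treat a match as a prompt to read more closely rather than a verdict.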

I suspect that, just like other forms of pollution, there will soon be a clamour to clean up AI pollution. My hope is that the search engines will sniff out AI-written content and refuse to index it.

David Meerman Scott is an internationally acclaimed marketing strategist, global keynote speaker and author. Read the original post.
